A/B testing is one of the most common, simple and effective techniques for improving the performance of marketing campaigns.

Yet many marketeers draw unclear conclusions and make wrong decisions, which can lead to declining performance over time.

By following these 4 simple rules and good practices, any marketeer should be able to successfully plan, implement and validate any A/B test.

1. Have a Clear Hypothesis

Before starting any A/B test, it is essential to set a clear and logical hypothesis, so that you commit in advance to which KPIs should move relative to your control segment.

Depending on the element of the campaign you want to test (copywriting, landing page…), you have to predict which KPIs are likely to change and which ones should not.

All we’re talking about here is common sense and logic.

If you are testing a new landing page, any metric measured before the visit should obviously not change. If you’re testing a new banner creative, the CTR% will usually be the main metric to look at. However, all the KPIs further down the conversion funnel might also be affected and should be taken into account.

Examples of Hypotheses

- Landing page test: “Increase the Conversion Rate”

- Creative test: “Increase the CTR% while maintaining the Conversion Rate” or “Increase the Conversion Rate while keeping a good CTR%” (if you are testing a more descriptive creative).

2. Look for Big Winners

The volume of test samples is critical to the accuracy of any A/B test you run.

The smaller the difference between variants, the more volume you will need. More volume means more time to get results, more budget spent, and more time before you can apply the results and start new tests. It is important to get reliable results as fast as possible.

So aim big. Be bold in your tests and aim for a variation of at least 15%, especially if you are at the beginning of the A/B testing phase. The bigger the variation, the faster and more reliable the conclusion will be.
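To make the link between variation and volume concrete, here is a minimal sketch based on the standard normal-approximation formula for comparing two proportions. The function name, the 5% baseline conversion rate, and the defaults (95% confidence, 80% power) are assumptions chosen for illustration, not values from this article.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_segment(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH segment to detect a relative lift.

    Normal-approximation formula for a two-sided test on two proportions.
    alpha (5%) and power (80%) are assumed defaults, not fixed rules.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # confidence threshold
    z_beta = NormalDist().inv_cdf(power)           # power threshold
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical 5% baseline conversion rate.
    for lift in (0.05, 0.15, 0.30):
        needed = visitors_per_segment(0.05, lift)
        print(f"+{lift:.0%} lift: about {needed} visitors per segment")
```

The required volume grows roughly with the inverse square of the lift: halving the variation you are looking for roughly quadruples the traffic you need, which is why tiny variations are so expensive to validate.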

In addition to getting clear results (positive or negative), you will understand the logic behind them much better and gain marketing expertise that improves future tests. Understanding your test results is what matters most for long-term improvement.

3. Test Few Things

A frequent mistake is to test too many things at the same time, and get lost in the testing process.

If you want to test 5 landing pages, 4 banners or different calls to action, you should not do everything at the same time. Testing a color change and a copywriting change in the same creative is not the smartest thing to do: whatever the result, you won’t know whether the lift was due to the color or the copy. The right approach is to test each change individually against your control creative.

Testing many things is possible, but it should be done over time, following a clear A/B test plan that allows you to improve performance step by step.

You might also see no significant improvement, even with plenty of volume. Don’t wait too long: this simply means your change doesn’t have much effect on the metrics. Move on.

Reaching a clear conclusion is not only important to improve numbers but also to improve your direct marketing knowledge. If you understand well what you do and what happens, you will get better and better over time at A/B testing.

4. Validate Results

Validating the results of each consecutive A/B test with the highest possible level of confidence is the most important part. If you draw the wrong conclusion from one or several tests, you might end up spending time and energy producing the opposite of what you wanted.

Make sure you use Random Segments

The first thing to look at is the segments you are using for your A/B tests. Test segments must be perfectly random in terms of both audience and timeframe.

Most modern display advertising platforms provide good A/B testing features, but make sure the volume is delivered to the exact same audience, and look at reports over the exact same timeframe, starting on the day you started your test (or the next day if you can’t filter by hour).
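If you split traffic yourself instead of relying on a platform feature, one common way to get a stable, roughly even split is to hash a user identifier. The sketch below is an illustration only: the function name, the test name and the user ID format are hypothetical.

```python
import hashlib
from collections import Counter


def assign_segment(user_id, test_name, segments=("control", "variant")):
    """Deterministically map a user to one segment with a near-uniform split.

    Hashing the test name together with the user ID keeps the assignment
    stable for the whole test and independent from other tests.
    """
    key = f"{test_name}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return segments[int(digest, 16) % len(segments)]


if __name__ == "__main__":
    # Hypothetical user IDs, only to check that the split is balanced.
    counts = Counter(
        assign_segment(f"user-{i}", "landing-page-test") for i in range(10_000)
    )
    print(counts)
```

Because the assignment depends only on the user and the test, the same person always sees the same version, and both segments receive traffic over the same timeframe.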

Setting up your hypothesis before seeing any results, and knowing which KPIs should not change, will help you detect whether a test was run under abnormal conditions.
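This check can be sketched in a few lines: list the KPIs your hypothesis says should stay flat, then flag any that moved more than a tolerance you choose. The function name, the 5% tolerance and all figures below are assumptions for illustration.

```python
def flag_unexpected_changes(control, variant, stable_kpis, tolerance=0.05):
    """Return the KPIs expected to stay flat whose relative change
    versus control exceeds the tolerance (5% is an assumed default)."""
    flagged = {}
    for kpi in stable_kpis:
        base = control[kpi]
        if base == 0:
            continue  # relative change is undefined for a zero baseline
        change = (variant[kpi] - base) / base
        if abs(change) > tolerance:
            flagged[kpi] = change
    return flagged


if __name__ == "__main__":
    # Hypothetical landing page test: only the conversion rate should move.
    control = {"impressions": 100_000, "ctr": 0.020, "conversion_rate": 0.050}
    variant = {"impressions": 99_500, "ctr": 0.026, "conversion_rate": 0.060}
    print(flag_unexpected_changes(control, variant, ["impressions", "ctr"]))
```

In this made-up example the CTR% differs by about 30% between segments even though only the landing page changed, which suggests the two segments did not receive comparable traffic.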

Statistically Validate the results

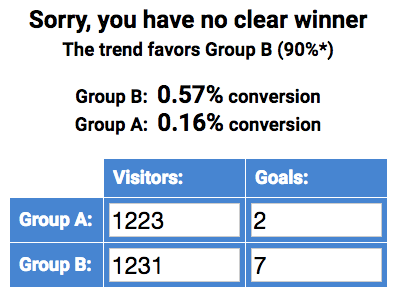

This is THE most common mistake inexperienced marketeers make: drawing conclusions from tests that are not statistically significant because of a lack of volume.

Even with 2000+ clicks, if only 9 leads have been generated in total, it is not possible to draw any conclusion. You need a minimum volume of conversions.

To be confident about your test, don’t rely on gut feelings. Use an A/B test validator like this one: http://drpete.co/split-test-calculator

As a rule of thumb, try to get at least 100 conversions (leads, orders) on each segment with a difference of 15% or more. Treat this as a lower bound: around this volume results can start to become significant, but always confirm with a validator. If you lack volume, take the trend into account and compare it to your hypothesis.
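For readers who prefer to check the numbers themselves, here is a minimal sketch of one common validation method, a pooled two-proportion z-test. It is not necessarily the method used by the calculator linked above, the function name is hypothetical, and the normal approximation it relies on is rough when conversions are very few.

```python
from math import sqrt
from statistics import NormalDist


def ab_test_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test.

    conv_* are conversions, n_* are clicks (or visits) in each segment.
    A p-value below 0.05 is the usual threshold for 95% confidence.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or all converted): nothing to compare
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


if __name__ == "__main__":
    # 9 leads in total over 2000 clicks, split 3 vs 6 (illustrative split).
    print(ab_test_p_value(3, 1000, 6, 1000))
    # A larger, made-up test: 300 vs 400 conversions on 10,000 clicks each.
    print(ab_test_p_value(300, 10_000, 400, 10_000))
```

In the first case one segment has twice as many leads as the other, yet the p-value stays far above 0.05: with so few conversions, the gap is well within what chance alone can produce.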

Challenge the results

If the results look very different from what your hypothesis predicted, double-check every step of the test, or even run the test again.

Summary

- Start with a defined “control” segment (e.g. “the control creative”).

- Set a hypothesis beforehand to predict which metrics should change and which should not.

- Take into account the whole user funnel downstream of the step where your test takes place.

- Aim big. Be bold. Don’t test tiny variations.

- Make sure the test is delivered to random segments during the whole test timespan.

- Statistically validate the results. Don’t judge with gut feelings.

- Don’t test everything at the same time. Plan step-by-step testing and learning.

- Learn, understand, be confident about your conclusions.

Reach us at Powerspace for help on setting up your A/B tests.